Test Driven Development in the Era of ChatGPT (Part 1)

The recent leap in Large Language Model (LLM)-based Natural Language Generation (NLG) tools like ChatGPT has disrupted general software development work, such as writing code for REST APIs and database operations. Software developers now crave these shiny AI tools to get their job done. They ask them to write the code and the tests as well. Some ambitious managers think they can resolve their painful developer resourcing issues by using such tools. Some software sales folks will also make wishful commitments for products and features, assuming ChatGPT will sort them out.

So What’s the Problem?

One common issue that makes users unhappy and produces waste is developing software without correctly understanding the problem. Imagine a less experienced, or simply excited, developer using a ChatGPT-like tool to complete a user story. This could be the typical sequence of actions:

- Get that refined user story assigned. It could be an ugly Jira ticket.

- Prompt ChatGPT with the problem and copy the generated code. Probably, without even reading the generated hints.

- Paste the code and possibly do some refactoring or try to retrofit.

- Ah… then realise there are no tests, go back to ChatGPT and ask to write some tests.

- For simple tasks, mostly, it’s job done.

- Tests pass, the build is green, and the IDE and coverage tools are happy.

- The developer feels it’s fun, saves loads of time, and will continue.

These models are stochastic by nature. This means the generated code could be different each time you ask, even for the same prompt.

Yann LeCun, one of the pioneers in the field of AI, highlights that LLMs do not have the right cognitive structure to achieve Artificial General Intelligence (AGI). They make factual and logical errors, diverge from the correct token path and have limited reasoning.

Also, as OpenAI says, they could hallucinate, and to a non-expert user the output could still look plausible. In addition, developers could end up leaking their organisation’s intellectual property into the world.

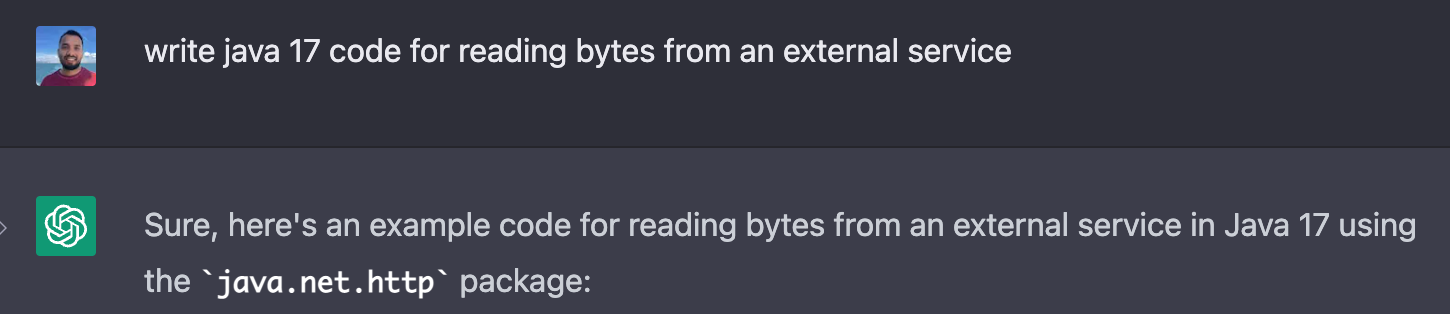

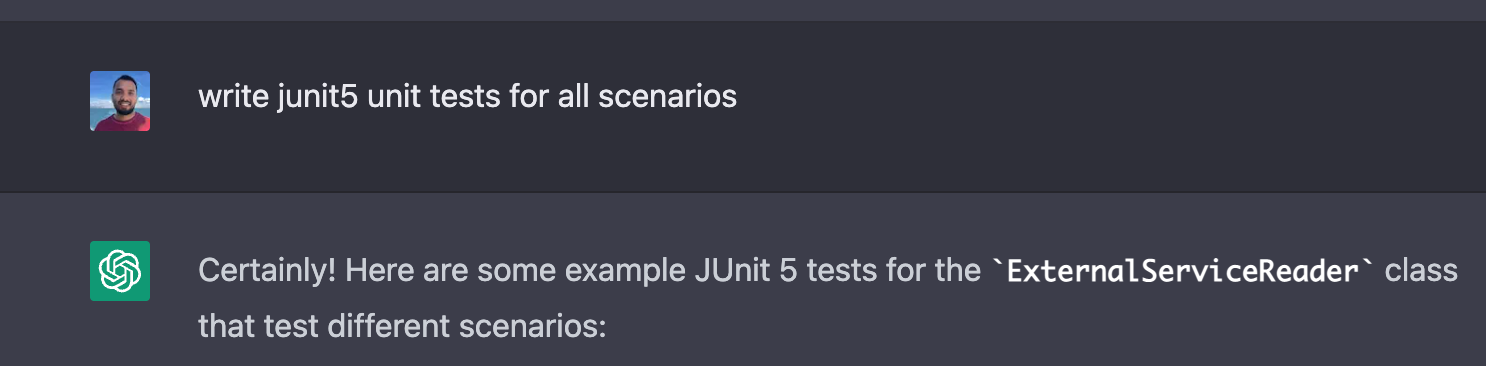

Let’s look at an example. I asked ChatGPT to write Java code to read bytes from an external server. This is what I got.

I copied the generated code into IntelliJ because the ChatGPT UI was too narrow.

The generated code compiles and runs. It’s not far from production quality. You can easily refactor this code and add things like pre-condition validation and edge case handling to make it production ready.
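As a rough illustration of the pre-condition validation mentioned above, the sketch below checks a URL before any request is built. The `UrlPreconditions` class and its `requireHttpUrl` method are hypothetical names that are not part of the generated code, and the rule it enforces (an absolute http or https URL with a host) is only one assumption about what "valid" could mean here.

```java
import java.net.URI;
import java.util.Objects;

/** Hypothetical helper: validates a URL before any HTTP request is attempted. */
final class UrlPreconditions {

    private UrlPreconditions() {
        // static helper, not meant to be instantiated
    }

    /**
     * Returns the parsed URI, or throws if the input is null, malformed,
     * or is not an absolute http/https URL with a host.
     */
    static URI requireHttpUrl(String url) {
        Objects.requireNonNull(url, "url must not be null");
        // URI.create throws IllegalArgumentException for malformed input
        URI uri = URI.create(url.trim());
        String scheme = uri.getScheme();
        boolean isHttp = "http".equalsIgnoreCase(scheme) || "https".equalsIgnoreCase(scheme);
        if (!isHttp || uri.getHost() == null) {
            throw new IllegalArgumentException("expected an absolute http(s) URL but got: " + url);
        }
        return uri;
    }
}
```

A reader class could call `requireHttpUrl` at the top of its read method, so that bad input fails fast with a clear message instead of surfacing later as an obscure HTTP client error.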

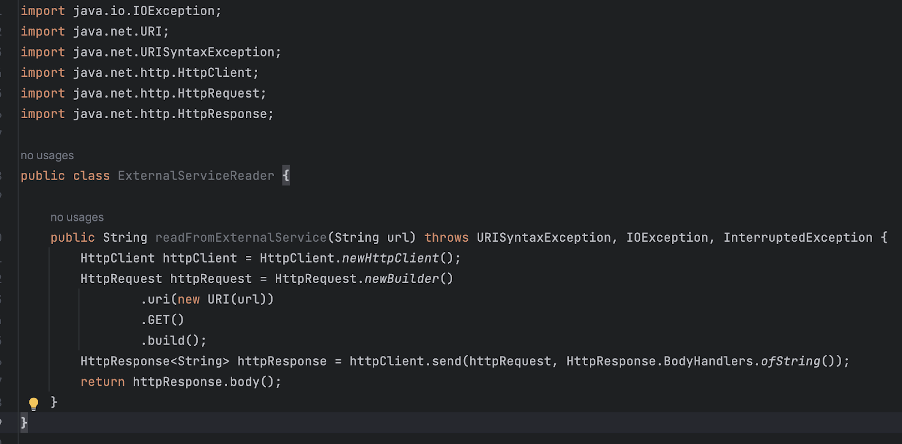

Then I asked it to generate unit test cases for the above code. Here’s what I got:

And I asked again. This time it wrote slightly different tests and pointed to example.com.

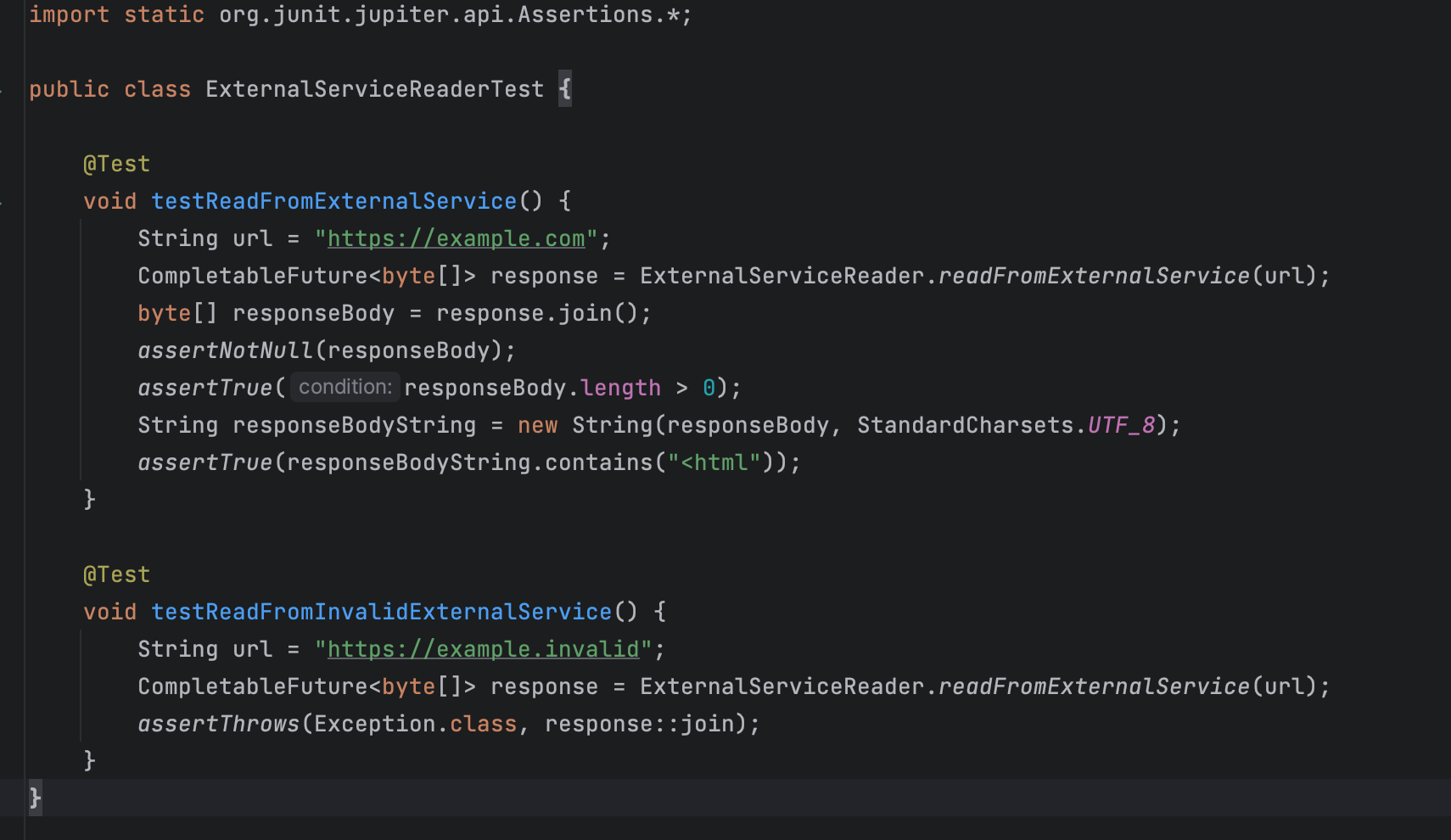

Ok, we got a green build.

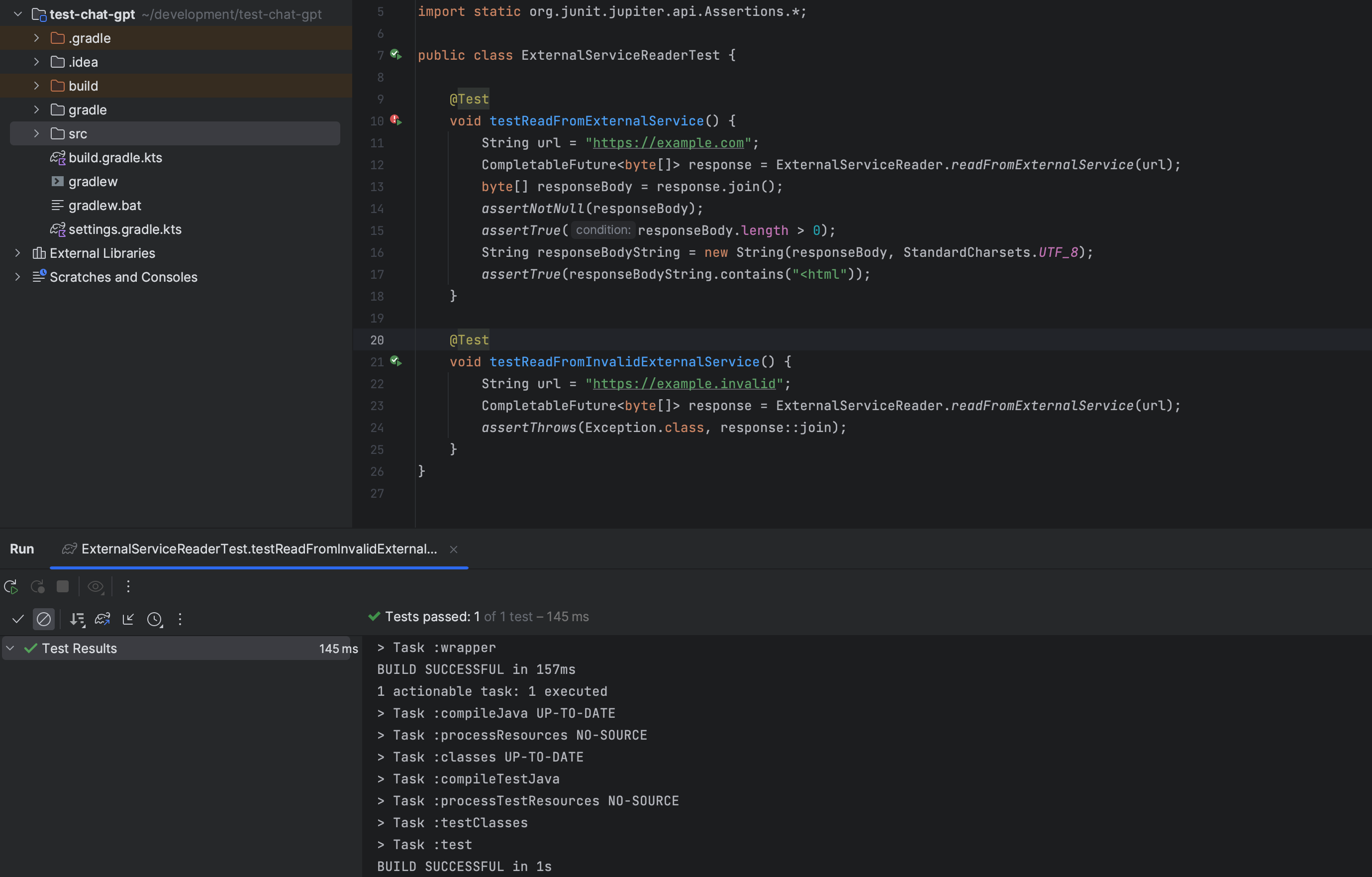

Bingo, ChatGPT thought unit testing with a real dependency was a good idea. After several attempts, it decided it was a good idea to use Mockito to mock the dependencies.

However, these tests were pretty useless as they didn’t test the subject under test correctly. If you look at the above tests, they violate some of the unit testing best practices, namely:

- Isolated - shouldn’t have any dependencies like HTTP calls and should run as self-contained standalone tests.

- Fast and Timely - should run in milliseconds. External dependencies like HTTP calls could make tests slow.

- Not flaky - external dependencies make tests flaky.

Novice developers may not know what a good test looks like. If the tests pass, they could easily accept this generated code as it is. The broader availability of AI code generators certainly reshapes the role of a developer, but the real worry is the quality.

Furthermore, experienced developers understand the value of proven techniques such as Test Driven Development (TDD), Refactoring, KISS and YAGNI, and know when and how to apply them in the right context. However, like any other craft, you have to master those skills, which is something a new developer will miss out on if they rely heavily on these AI tools.

At least until we have more reliable models that are closer to Artificial General Intelligence, along with control mechanisms, developers should be well aware of the adverse consequences of AI code generators. Otherwise, as the old saying “Monkey see, monkey do” goes, future developers could become shallow and rely mainly on these tools to automate their job.

What are the options?

Firstly, I want to emphasise that we should not reject such advancements in AI, which can make our lives more productive. My point is that developers should be more aware and in control.

On the other hand, fully understanding how these generative AI models work may not even be feasible because of the complexity of the subject. Therefore, I believe we can be pragmatic and strike the right balance with the following approach:

Continue to follow proven software engineering practices and use developer aids such as static analysis tools as guardrails. Get help from tools like ChatGPT or GitHub Copilot to increase productivity.

I am going to show how we could combine a well-established technique called Test Driven Development (TDD) with AI code generators.

Let us make TDD and Generative AI work in harmony

As a software developer, I started programming without writing any test cases for the production code. Then, I moved into the stage where I wrote JUnit tests as something nice to have after writing the production code. It was pretty much to make our tech leadership and code coverage tools happy. This is still the case with some developers who haven’t seen the true value of automated testing, let alone TDD.

The crux of TDD is not writing production code, but capturing the intent, or the mini-specification, of the user requirement in test cases. This technique is crucial because it makes you think about how to solve the problem, what setup you require, what action to perform and how to verify it before any production code exists. Those test cases are the guardrail that will help you confidently adopt generative tools like ChatGPT. You will be in control as you define the specification for the behaviour you need before asking the generative model to write the code for you.

Also, the Red, Green and Refactor cycle means you only need to ask the generative model to write enough production code to pass the test. Afterwards, you will have the opportunity to refactor the generated code to do the clever bits, such as design changes, and make the code readable and maintainable.

Furthermore, TDD can also organically lead to a modular, loosely coupled design in the code.
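To make the idea of loose coupling concrete, here is a minimal sketch. `ByteSource` and `LooselyCoupledReader` are hypothetical names used only for illustration, and this is not the design used in the steps below. The reader depends on a small interface, so a test can supply a canned implementation, while production code could wrap a real HTTP client behind the same interface.

```java
import java.nio.charset.StandardCharsets;

/** Hypothetical seam: anything that can turn a URL into bytes. */
@FunctionalInterface
interface ByteSource {
    byte[] fetch(String url);
}

/** Depends only on the ByteSource abstraction, never on a concrete HTTP client. */
final class LooselyCoupledReader {
    private final ByteSource source;

    LooselyCoupledReader(ByteSource source) {
        this.source = source;
    }

    byte[] read(String url) {
        return source.fetch(url);
    }
}

final class LooselyCoupledReaderDemo {
    public static void main(String[] args) {
        // In a test, the seam is satisfied by a lambda instead of a network call
        ByteSource canned = url -> "some-test-response".getBytes(StandardCharsets.UTF_8);
        LooselyCoupledReader reader = new LooselyCoupledReader(canned);

        byte[] actual = reader.read("http://some-url.test");
        System.out.println(new String(actual, StandardCharsets.UTF_8));
    }
}
```

Because the dependency arrives through the constructor, swapping the canned source for a real one needs no change to the reader itself, which is the kind of design pressure a test written first tends to apply.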

Let’s now implement the same requirement following the TDD technique but using the generated production code.

Step 1 - get started with the test

    public class ExternalServiceReaderTddTest {

        @Test
        public void should_read_from_an_external_http_service() {
            ExternalServiceReaderTdd externalServiceReader = new ExternalServiceReaderTdd();
            byte[] bytes = externalServiceReader.read("http://some-url.test");
            assertNotNull(bytes);
        }
    }

This test is Red: it won’t even compile yet, because ExternalServiceReaderTdd doesn’t exist. Let’s write the minimum code to compile it.

    public class ExternalServiceReaderTdd {

        public byte[] read(final String url) {
            return new byte[0];
        }
    }

Step 2 - assert on the expected

    @Test
    public void should_read_from_an_external_http_service() {
        ExternalServiceReaderTdd externalServiceReader = new ExternalServiceReaderTdd();
        byte[] bytes = externalServiceReader.read("http://some-url.test");
        assertNotNull(bytes);
        assertTrue(bytes.length > 0);
        assertArrayEquals("some-test-response".getBytes(UTF_8), bytes);
    }

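This will fail because the actual return value differs from the expected one. Let’s now copy in the previously generated code. Note that read now declares checked exceptions, so the test method has to declare or handle them too.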

    public class ExternalServiceReaderTdd {

        private static final HttpClient httpClient = HttpClient.newHttpClient();

        public byte[] read(final String url) throws IOException, InterruptedException {
            HttpRequest request = HttpRequest.newBuilder()
                    .uri(URI.create(url))
                    .GET()
                    .build();
            return httpClient.send(request, HttpResponse.BodyHandlers.ofByteArray()).body();
        }
    }

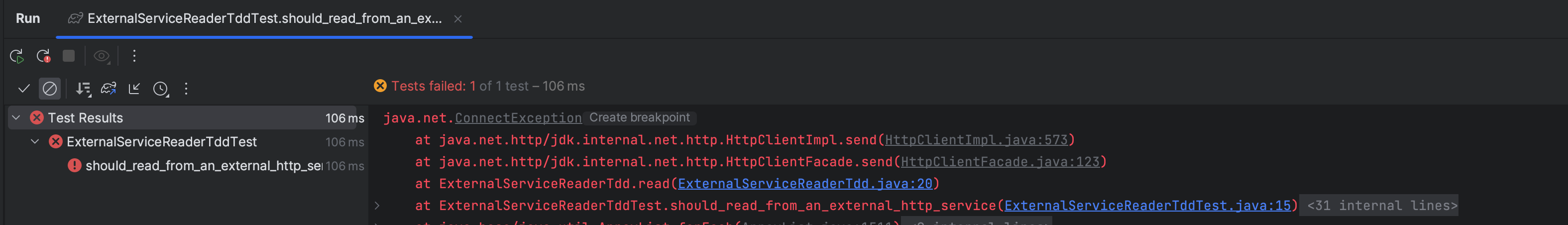

Alright, we have now caught the real issue. The code tries to make a real HTTP call and fails.

Step 3 - make the test correct

I want to make the test pass, so I changed the static HttpClient in the production code to an instance field and injected it as a constructor parameter. This allows me to mock the HttpClient and prime the required response so I can test the requirement in isolation.

    public class ExternalServiceReaderTdd {

        private final HttpClient httpClient;

        public ExternalServiceReaderTdd(final HttpClient httpClient) {
            this.httpClient = httpClient;
        }

        public byte[] read(final String url) throws IOException, InterruptedException {
            HttpRequest request = HttpRequest.newBuilder()
                    .uri(URI.create(url))
                    .GET()
                    .build();
            return httpClient.send(request, HttpResponse.BodyHandlers.ofByteArray()).body();
        }
    }

    @Test
    public void should_read_from_an_external_http_service() throws IOException, InterruptedException {
        // Arrange: mock the client and prime it with a canned response
        HttpClient httpClient = Mockito.mock(HttpClient.class);
        HttpResponse<byte[]> httpResponse = mock(HttpResponse.class);
        byte[] expectedResponse = "some-test-response".getBytes(UTF_8);
        when(httpResponse.body()).thenReturn(expectedResponse);
        when(httpClient.send(
                HttpRequest.newBuilder().uri(URI.create("http://some-url.test")).GET().build(),
                HttpResponse.BodyHandlers.ofByteArray()))
                .thenReturn(httpResponse);
        ExternalServiceReaderTdd externalServiceReader = new ExternalServiceReaderTdd(httpClient);

        // Act: no real HTTP call is made
        byte[] bytes = externalServiceReader.read("http://some-url.test");

        // Assert
        assertNotNull(bytes);
        assertTrue(bytes.length > 0);
        assertArrayEquals(expectedResponse, bytes);
    }

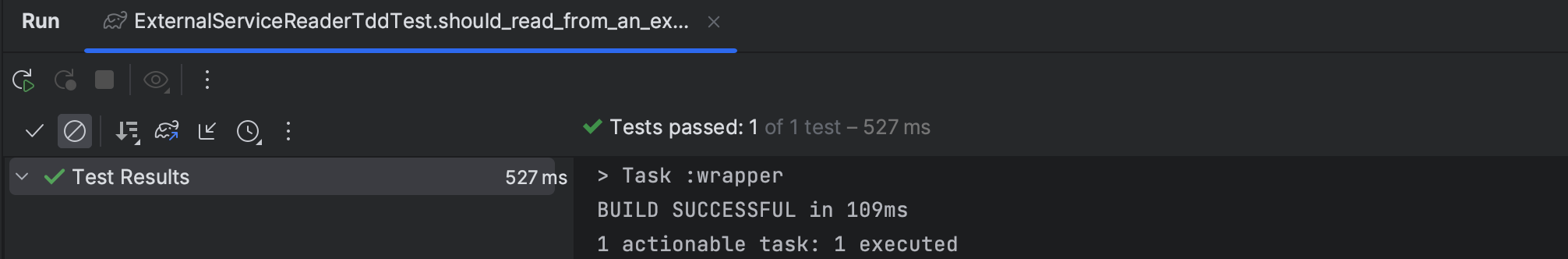

Okay, here’s the green build.
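It is worth a quick look at why the stub in the test above matches the call made by the production code. When plain values are passed to `when(...)`, Mockito compares the arguments using `equals()`, and the JDK documents `HttpRequest` equality as being based on the URI, method and headers. The sketch below, with a hypothetical `RequestEqualityDemo` class, checks that property using only the JDK. Whether two calls to `BodyHandlers.ofByteArray()` return equal handlers is, as far as I can tell, not specified, so that part of the match may be JDK-dependent; Mockito's argument matchers (for example `any()`) are a common way to avoid relying on it.

```java
import java.net.URI;
import java.net.http.HttpRequest;

/** Hypothetical demo class: shows that HttpRequest instances compare by value. */
final class RequestEqualityDemo {

    /** Builds a GET request the same way the production code and the test do. */
    static HttpRequest get(String url) {
        return HttpRequest.newBuilder()
                .uri(URI.create(url))
                .GET()
                .build();
    }

    public static void main(String[] args) {
        HttpRequest fromTest = get("http://some-url.test");
        HttpRequest fromProduction = get("http://some-url.test");
        HttpRequest different = get("http://another-url.test");

        // Two distinct objects built from the same URI and method
        System.out.println("same instance: " + (fromTest == fromProduction));
        // Value equality is what lets a stub keyed on one request match the other
        System.out.println("equal requests: " + fromTest.equals(fromProduction));
        System.out.println("equal to a different URL: " + fromTest.equals(different));
    }
}
```

Building a request does not open a connection, so this check stays isolated and fast, in line with the testing practices listed earlier.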

Step 4 - refactor the code and make it tidier

    public class ExternalServiceReaderTdd {

        private final HttpClient httpClient;

        public ExternalServiceReaderTdd(final HttpClient httpClient) {
            this.httpClient = httpClient;
        }

        public byte[] read(final String url) throws IOException, InterruptedException {
            HttpRequest request = HttpRequest.newBuilder()
                    .uri(URI.create(url))
                    .GET()
                    .build();
            return httpClient.send(request, BodyHandlers.ofByteArray()).body();
        }
    }

    @Test
    public void should_read_from_an_external_http_service() throws IOException, InterruptedException {
        // Arrange: mock the client and prime it with a canned response
        HttpClient mockedHttpClient = mock(HttpClient.class);
        HttpResponse<byte[]> mockedHttpResponse = mock(HttpResponse.class);
        byte[] expectedResponse = "some-test-response".getBytes(UTF_8);
        when(mockedHttpResponse.body()).thenReturn(expectedResponse);
        when(mockedHttpClient.send(
                HttpRequest.newBuilder().uri(URI.create("http://some-url.test")).GET().build(),
                HttpResponse.BodyHandlers.ofByteArray()))
                .thenReturn(mockedHttpResponse);
        ExternalServiceReaderTdd externalServiceReader = new ExternalServiceReaderTdd(mockedHttpClient);

        // Act
        byte[] actualResponse = externalServiceReader.read("http://some-url.test");

        // Assert
        assertNotNull(actualResponse);
        assertArrayEquals(expectedResponse, actualResponse);
    }

We could check this code in.

Is this textbook TDD? No, I’d say this is a modified version of it. I didn’t write the production code, and the generated code influenced the choice of HttpClient. But the most important takeaway is that, as a developer, I need to be in control of, and confident in, the code I write. ChatGPT helps me increase my productivity, and TDD helps me produce deterministic and testable code.

What’s Next?

I hope to explore other options to bring control and confidence into the era of coding with AI code generation tools.

References

Yann LeCun, Philosophy of Deep Learning, NYU, 2023-03-24

https://www.pcmag.com/news/samsung-software-engineers-busted-for-pasting-proprietary-code-into-chatgpt